the theory (bio)

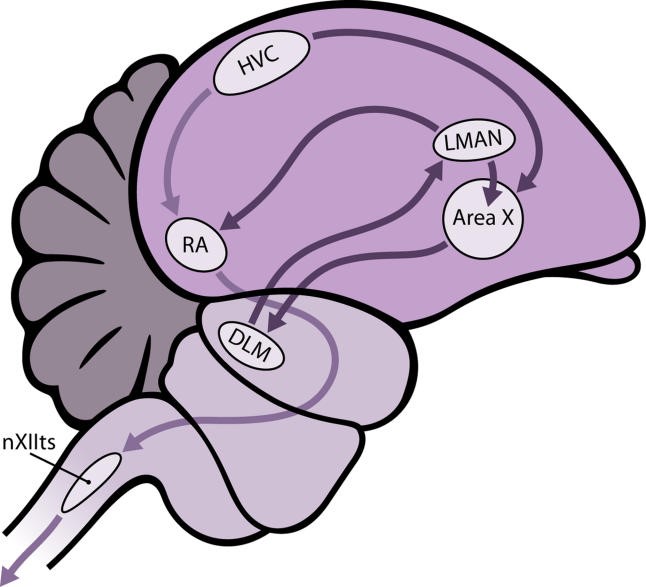

The hyperstriatum ventrale, pars caudalis (HVC), a nucleus in the brain of songbirds, drives song learning and production through its projections to the robust nucleus of the arcopallium (RA). The synaptic chain model of neuronal sequence generation supported by Long, Jin, and Fee (2010) accounts for this production. I examined how spike-timing-dependent plasticity (STDP) might lead to the creation and maintenance of a synaptic chain, including the effects of variability introduced to RA from LMAN, the output nucleus of a basal ganglia forebrain circuit (Olveczky et al., 2011). To find out, I modeled the connections from HVC to RA with additional input from LMAN (Figure 1). This type of interconnectivity supports a mechanism of song learning in which birds retain patterns through a combination of imitation and variation (Nottebohm, 2005).

essentially, what i did was model this subsystem as a two-layer circuit (HVC to RA), with some external noise (LMAN) to aid in the learning.
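
to make the STDP idea concrete, here is a minimal sketch of a pair-based STDP rule applied to a single HVC-to-RA synapse, with LMAN stood in for by gaussian jitter on the RA spike time. the constants (`A_PLUS`, `A_MINUS`, `TAU`, `W_MAX`), the function names, and the jitter model are all assumptions for illustration; the actual rule and parameters of the model are not given here.

```python
import math
import random

A_PLUS = 0.01    # assumed potentiation step
A_MINUS = 0.012  # assumed depression step
TAU = 20.0       # assumed time constant of the STDP window, in ms
W_MAX = 1.0      # assumed upper bound on a synaptic weight


def stdp_dw(dt):
    """Weight change for one spike pair, where dt = t_post - t_pre in ms."""
    if dt > 0:   # pre fired before post: strengthen
        return A_PLUS * math.exp(-dt / TAU)
    if dt < 0:   # post fired before pre: weaken
        return -A_MINUS * math.exp(dt / TAU)
    return 0.0


def train_synapse(w, pre_time, post_time, lman_jitter, rng, trials=200):
    """Pair one HVC spike with one RA spike per trial, jittering the RA spike."""
    for _ in range(trials):
        jitter = rng.gauss(0.0, lman_jitter)  # hypothetical stand-in for LMAN input
        w += stdp_dw(post_time + jitter - pre_time)
        w = min(max(w, 0.0), W_MAX)           # keep the weight in [0, W_MAX]
    return w


rng = random.Random(0)
final_w = train_synapse(0.5, pre_time=0.0, post_time=5.0, lman_jitter=2.0, rng=rng)
print(final_w)
```

when the RA spike reliably follows the HVC spike, the weight drifts upward toward `W_MAX`; a larger `lman_jitter` makes the occasional reversed pair (and so depression) more likely.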

the theory (ART)

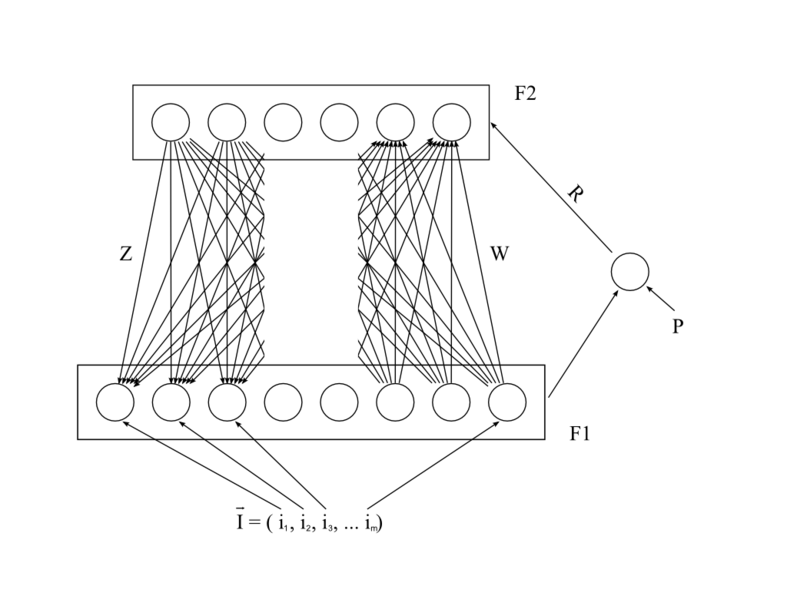

the learning model used by the bot is adaptive resonance theory (ART). it is a 2-layer model in which an input is matched to a single best output, with the help of competitive inhibition in the output layer. after an input has been classified, the output's match to the input is compared to a threshold value (the vigilance parameter, in ART terms). if the threshold is overcome, training occurs and the weights of the inputs are strengthened; if the threshold is not reached, the output neuron is inhibited and the input is presented again.
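
the classify, test, then train-or-inhibit loop described above can be sketched roughly like this. it is a simplified ART1-style version for binary inputs, not the bot's actual code: the name `art1_present`, the default vigilance of 0.7, the choice function, and the step that creates a new output when every existing one has been inhibited are all assumptions.

```python
def art1_present(x, prototypes, vigilance=0.7):
    """Present binary vector x; learn in place and return the winning output index."""
    norm_x = sum(x)
    inhibited = set()
    while len(inhibited) < len(prototypes):
        # competition: the non-inhibited output with the strongest response wins
        candidates = [j for j in range(len(prototypes)) if j not in inhibited]
        best = max(
            candidates,
            key=lambda j: sum(a & b for a, b in zip(x, prototypes[j]))
            / (0.5 + sum(prototypes[j])),
        )
        overlap = sum(a & b for a, b in zip(x, prototypes[best]))
        if norm_x and overlap / norm_x >= vigilance:
            # threshold overcome: train the winner on the shared features
            prototypes[best] = [a & b for a, b in zip(x, prototypes[best])]
            return best
        # threshold not reached: inhibit this output and present the input again
        inhibited.add(best)
    # assumed fallback: no existing output matched, so recruit a new one
    prototypes.append(list(x))
    return len(prototypes) - 1


protos = []
for pattern in ([1, 1, 0, 0], [1, 1, 1, 0], [1, 1, 0, 0], [0, 0, 1, 1]):
    print(pattern, "->", art1_present(pattern, protos))
```

raising `vigilance` makes the network pickier, so it ends up with more, narrower categories; lowering it lumps more inputs together.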

how it works

starting with a recording, i slice it up into a sequence of short segments. for each segment, i fourier transform the audio and sort the result into frequency ranges corresponding to different notes. then, to actually learn the sequence, i feed the last n notes into an ART circuit, wait for it to converge on the correct next note as its output, and repeat until the entire song has been learned. finally, feed the network the first couple of notes of your song and it should produce the rest.
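
here is a rough sketch of that pipeline using only the python standard library. a few things are swapped in or assumed: instead of a full FFT it evaluates the fourier component only at each candidate note frequency, the note table `NOTE_FREQS` is made up (the real note set isn't listed here), and a plain dictionary stands in for the ART circuit in `continue_song`. all function names are hypothetical.

```python
import math

# assumed note table (equal temperament, A4 = 440 Hz)
NOTE_FREQS = {"C4": 261.63, "D4": 293.66, "E4": 329.63, "G4": 392.00, "A4": 440.00}


def power_at(chunk, rate, freq):
    """Squared magnitude of the Fourier component of chunk at freq (Hz)."""
    re = sum(s * math.cos(2 * math.pi * freq * i / rate) for i, s in enumerate(chunk))
    im = sum(s * math.sin(2 * math.pi * freq * i / rate) for i, s in enumerate(chunk))
    return re * re + im * im


def dominant_note(chunk, rate):
    """Name of the note in NOTE_FREQS with the most energy in this chunk."""
    return max(NOTE_FREQS, key=lambda name: power_at(chunk, rate, NOTE_FREQS[name]))


def training_pairs(notes, n):
    """(last n notes, next note) pairs to present to the learner."""
    return [(tuple(notes[i - n:i]), notes[i]) for i in range(n, len(notes))]


def continue_song(seed, table, length):
    """Extend seed note by note; table (a dict) is a stand-in for the trained ART net."""
    song = list(seed)
    n = len(seed)
    while len(song) < length and tuple(song[-n:]) in table:
        song.append(table[tuple(song[-n:])])
    return song


rate = 8000  # assumed sample rate in Hz
chunk = [math.sin(2 * math.pi * 440.0 * i / rate) for i in range(800)]  # 0.1 s test tone
print(dominant_note(chunk, rate))

notes = ["C4", "D4", "E4", "C4", "E4", "G4"]
table = dict(training_pairs(notes, 2))
print(continue_song(notes[:2], table, len(notes)))
```

note that the dictionary only works when each n-note context has a single next note; a song that repeats a context with different continuations needs a larger n.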

as for the robot part, once a sequence of notes has been generated, i "compile" some arduino code (really just some boilerplate code plus the sequence of notes converted to the frequencies to play, with some waits in between), upload it to the OWL-bot, hit the button, and listen to an awful, buzzing variation of the original recording.
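
the "compile" step could look something like the sketch below, which turns a list of frequencies into an arduino sketch built on the standard `tone()`, `noTone()`, and `delay()` calls. the function name `arduino_sketch`, the speaker pin number, and the note and gap durations are assumptions, and for simplicity it plays the song once from `setup()` rather than waiting for the OWL-bot's button.

```python
def arduino_sketch(freqs_hz, note_ms=250, gap_ms=50, pin=8):
    """Return Arduino source that plays freqs_hz in order on an assumed speaker pin."""
    lines = [
        f"const int SPEAKER_PIN = {pin};",
        "",
        "void setup() {",
    ]
    for freq in freqs_hz:
        # tone() returns immediately, so wait out the note plus a short gap
        lines.append(f"  tone(SPEAKER_PIN, {round(freq)}, {note_ms});")
        lines.append(f"  delay({note_ms + gap_ms});")
    lines += ["  noTone(SPEAKER_PIN);", "}", "", "void loop() {}"]
    return "\n".join(lines) + "\n"


print(arduino_sketch([440.0, 261.63, 392.0]))
```

the output can be saved to a `.ino` file and uploaded like any other sketch.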

Long, Michael A., Dezhe Z. Jin, and Michale S. Fee. "Support for a Synaptic Chain Model of Neuronal Sequence Generation." Nature 468.7322 (2010): 394-399.

Olveczky, Bence P., et al. "Changes in the Neural Control of a Complex Motor Sequence during Learning." Journal of Neurophysiology 106 (2011): 386-397.

Nottebohm, Fernando. "The Neural Basis of Birdsong." PLoS Biology 3.5 (2005): e164.